Patrick asked me to look into the compression options Dracut offers for the initramfs. These are the results of that research. I’m posting them here because they are a bit lengthy and include charts I made, which need image support to share.

tl;dr: I think we should use xz compression. It’s acceptably fast, it compresses much better than gzip, and there doesn’t seem to be any compelling reason to avoid it.

The kernel has to decompress the initramfs at boot, so I only researched compression algorithms supported by the kernel in Debian Bookworm. Trixie’s kernel supports exactly the same algorithms, so this research should apply to Trixie as well, assuming the compression utilities perform similarly there. I find that likely, given how mature these utilities are.

Bookworm’s kernel and Dracut both support the following compression algorithms:

- gzip (this is what we’re using now)

- lz4

- lzma

- lzo

- xz

- zstd

- cat (uncompressed, taken into consideration to provide a best-case scenario for time and a worst-case scenario for size)

Dracut also supports bzip2, but neither Bookworm’s nor Trixie’s kernel appeared to support it.
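If anyone wants to double-check which initramfs decompressors a given kernel was built with, the kernel’s `CONFIG_RD_*` build options are what matter. Below is a minimal Python sketch that lists the enabled ones. It assumes a Debian-style `/boot/config-<release>` file exists for the running kernel, and the function names are just illustrative.

```python
import platform
from pathlib import Path

# Kernel build options that enable each initramfs decompressor.
RD_OPTIONS = {
    "gzip": "CONFIG_RD_GZIP",
    "bzip2": "CONFIG_RD_BZIP2",
    "lzma": "CONFIG_RD_LZMA",
    "xz": "CONFIG_RD_XZ",
    "lzo": "CONFIG_RD_LZO",
    "lz4": "CONFIG_RD_LZ4",
    "zstd": "CONFIG_RD_ZSTD",
}


def supported_initramfs_compressors(config_text: str) -> list[str]:
    """Return the algorithms whose CONFIG_RD_* option is set to y in a kernel config."""
    enabled = set()
    for line in config_text.splitlines():
        line = line.strip()
        # Disabled options appear as "# CONFIG_RD_X is not set" and are skipped here.
        if line.startswith("CONFIG_RD_") and line.endswith("=y"):
            enabled.add(line[: -len("=y")])
    return [alg for alg, opt in RD_OPTIONS.items() if opt in enabled]


def running_kernel_config() -> str:
    # Assumption: Debian ships the build config as /boot/config-<uname -r>.
    return Path(f"/boot/config-{platform.release()}").read_text()
```

On the VM, `print(supported_initramfs_compressors(running_kernel_config()))` should list the algorithms the running kernel can unpack.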

To determine which algorithm was most likely desirable, I benchmarked them against each other. The following testing methodology was used:

- Create a file, `/etc/dracut.conf.d/99-compress.conf`, to set the compression algorithm in.

- For each compression algorithm, change `99-compress.conf` to specify the desired algorithm, then run `time sudo dracut --force` three times.

- Record the output of `time` after each dracut invocation.

- Record the size of the output initramfs after each dracut invocation.
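The methodology above can be scripted. This is a rough Python sketch of it, not what I actually ran (I ran the commands by hand). It assumes it runs as root, that dracut accepts these names in its `compress=` setting, and that Debian’s dracut writes the image for the running kernel to `/boot/initrd.img-<release>`. The helper names are hypothetical. It measures wall-clock duration with `time.perf_counter()` rather than the shell’s `time`.

```python
import os
import platform
import statistics
import subprocess
import time
from pathlib import Path

CONF = Path("/etc/dracut.conf.d/99-compress.conf")
# Assumption: Debian's dracut writes the running kernel's image here.
IMAGE = Path(f"/boot/initrd.img-{platform.release()}")
ALGORITHMS = ["gzip", "lz4", "lzma", "lzop", "xz", "zstd", "cat"]
RUNS = 3


def conf_line(algorithm: str) -> str:
    """dracut.conf line selecting a compressor, e.g. compress="xz"."""
    return f'compress="{algorithm}"\n'


def benchmark(algorithm: str, runs: int = RUNS) -> tuple[list[float], int]:
    """Regenerate the initramfs `runs` times; return wall-clock seconds and final size in bytes."""
    CONF.write_text(conf_line(algorithm))
    times = []
    for _ in range(runs):
        start = time.perf_counter()
        subprocess.run(["dracut", "--force"], check=True)
        times.append(time.perf_counter() - start)
    return times, IMAGE.stat().st_size


def main() -> None:
    if os.geteuid() != 0:
        raise SystemExit("run as root: dracut writes to /boot and /etc")
    for alg in ALGORITHMS:
        times, size = benchmark(alg)
        print(f"{alg:5} median {statistics.median(times):7.3f}s  size {size // 1024}K")
    # Remove the test override so dracut falls back to its normal compression setting.
    CONF.unlink()
```

Calling `main()` as root would run every algorithm in turn and then delete `99-compress.conf` again.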

In all instances, the file size of the output initramfs was identical (to within a kilobyte at least) across all three runs of Dracut when using a single compression algorithm, so I only recorded file size once per algorithm. All tests were run in a KVM/QEMU virtual machine with 4 virtual CPUs, 4 GB RAM, and all host CPU features passed through to the guest (-cpu host). The host system has an i9-14900HX processor. The raw results of the benchmarks are as follows:

- gzip
  - run 1: `0.06s user 0.10s system 1% cpu 12.240 total`
  - run 2: `0.01s user 0.02s system 0% cpu 10.907 total`
  - run 3: `0.01s user 0.02s system 0% cpu 10.912 total`
  - file size: 39724K

- lz4
  - run 1: `0.02s user 0.01s system 0% cpu 7.476 total`
  - run 2: `0.02s user 0.01s system 0% cpu 7.408 total`
  - run 3: `0.01s user 0.02s system 0% cpu 7.541 total`
  - file size: 48064K

- lzma
  - run 1: `0.09s user 0.07s system 0% cpu 46.595 total`
  - run 2: `0.01s user 0.02s system 0% cpu 45.677 total`
  - run 3: `0.02s user 0.01s system 0% cpu 45.339 total`
  - file size: 26292K

- lzop
  - run 1: `0.09s user 0.08s system 0% cpu 38.810 total`
  - run 2: `0.02s user 0.01s system 0% cpu 37.862 total`
  - run 3: `0.01s user 0.02s system 0% cpu 37.837 total`
  - file size: 44880K

- xz
  - run 1: `0.09s user 0.08s system 1% cpu 11.655 total`
  - run 2: `0.01s user 0.01s system 0% cpu 10.542 total`
  - run 3: `0.02s user 0.01s system 0% cpu 10.571 total`
  - file size: 28288K

- zstd
  - run 1: `0.10s user 0.07s system 1% cpu 8.676 total`
  - run 2: `0.08s user 0.09s system 2% cpu 7.680 total`
  - run 3: `0.01s user 0.01s system 0% cpu 7.404 total`
  - file size: 33248K

- cat (no compression, baseline)
  - run 1: `0.09s user 0.08s system 3% cpu 5.279 total`
  - run 2: `0.01s user 0.02s system 0% cpu 4.189 total`
  - run 3: `0.01s user 0.02s system 0% cpu 4.252 total`
  - file size: 136088K
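To make the raw numbers above easier to compare, here is a small Python sketch that reduces them to a median wall-clock time and a size relative to the uncompressed (`cat`) image. I picked the median because the first run of an algorithm was usually the slowest. The data is copied from the list above; the names are illustrative.

```python
import statistics

# Wall-clock "total" seconds from the three runs above, and output size in K.
RESULTS: dict[str, tuple[list[float], int]] = {
    "gzip": ([12.240, 10.907, 10.912], 39724),
    "lz4": ([7.476, 7.408, 7.541], 48064),
    "lzma": ([46.595, 45.677, 45.339], 26292),
    "lzop": ([38.810, 37.862, 37.837], 44880),
    "xz": ([11.655, 10.542, 10.571], 28288),
    "zstd": ([8.676, 7.680, 7.404], 33248),
    "cat": ([5.279, 4.189, 4.252], 136088),
}


def summarize(results: dict[str, tuple[list[float], int]]) -> dict[str, tuple[float, float]]:
    """Return {algorithm: (median seconds, size as a fraction of the uncompressed image)}."""
    baseline = results["cat"][1]
    return {
        alg: (statistics.median(times), size / baseline)
        for alg, (times, size) in results.items()
    }


# Print from smallest to largest output.
for alg, (secs, ratio) in sorted(summarize(RESULTS).items(), key=lambda kv: kv[1][1]):
    print(f"{alg:5} {secs:6.2f}s  {ratio:6.1%} of uncompressed")
```

Sorted by size, this puts lzma first and xz second, with xz’s median time slightly below gzip’s.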

I did not benchmark boot speed with each algorithm, although I did verify that the virtual machine booted with an initramfs made with each one. Boot speed seemed about the same to me with every algorithm, and it would have been difficult to measure in an objective, reliable way. That said, I may have noticed a very slight speedup during boot with zstd.

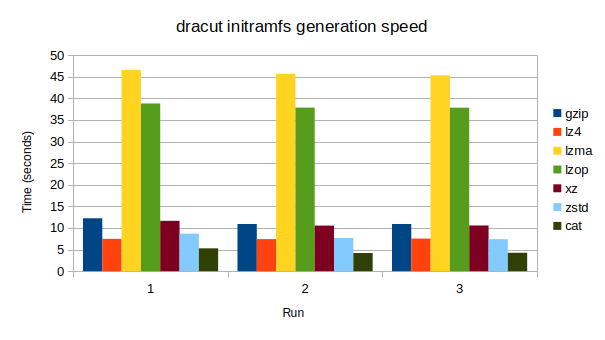

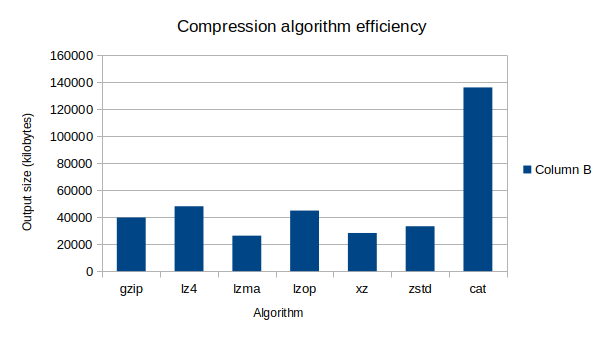

The following two graphs are provided to visualize the data above (created using LibreOffice):

Takeaways from the above data:

- From a size standpoint, lzma performed the best and lz4 the worst.

- From a speed standpoint, zstd and lz4 are roughly tied for best, while lzma is the slowest.

- lzo/lzop is clearly a bad choice: it approaches lzma in slowness while producing a file larger than gzip’s and almost as large as lz4’s. It’s the worst of all worlds.

- xz is probably the most compelling balance between speed and compression: its output is almost as small as lzma’s, while it runs just a hair faster than gzip.

- zstd is a modest improvement over gzip in size (33248K vs. 39724K), while also being very fast.

Based on this data, I think xz is the best choice here. Our existing compression speed has been acceptable, and the roughly 2-3 seconds per initramfs generation that zstd would save over gzip or xz doesn’t seem that compelling, except perhaps when doing ARM builds of Kicksecure on x86_64 hardware.

It is worth noting that people who know much more about compression tools under the hood than I do have complained about design flaws in xz, which are documented here. I do not believe the issues raised in that article are a concern for us, for the following reasons:

- The article primarily relates to xz’s suitability for long-term archival. Kernel initramfs files aren’t really something where “long-term archival” is a concern.

- Most of the article focuses on xz’s lack of resilience against partial archive corruption. We don’t care about this at all: we assume the initramfs is bit-for-bit identical to when it was created, and in the future we will likely be signing the initramfs as part of Verified Boot, which will mandate that it be bit-for-bit identical to when it was created.

- Other parts of the article focus on compatibility issues between different versions of xz. This is not a concern either: as long as dracut produces an initramfs that the Linux kernel can read and boot with, things are compatible enough for us.

- The rest of the article appears to focus on various design decisions in xz that could have been made better. None of that is relevant to us, since whatever feature set Dracut uses in xz gives a very acceptable compression speed along with the second-smallest file size of any algorithm tested here. Even if xz could be better than it is, right now it beats everything else, except perhaps zstd if speed really matters to you.

Someone else I saw did similar compression benchmarking and concluded that zstd was the best general-purpose algorithm, though the data they present shows that parallel xz actually beat zstd’s best compression in both compressed size and speed. One concerning point that article does make is that xz can use a lot of RAM.

For this reason, I tried generating a dracut initramfs using xz compression in a Kicksecure-CLI VirtualBox VM with only 512 MB RAM. According to htop, memory consumption peaked at 318M during initramfs generation and dropped to 263M once the initramfs was generated, meaning that dracut and the tools it ran (including xz) used about 55M during the process. Only about 1.97M of swap was used. This is much more than gzip and zstd, which each need only about 5M of memory, but it seems acceptable to me. 55M is not that much, especially given that fwupd is sitting there using 97M while doing basically nothing.
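Comparing htop readings before and after is a fairly rough way to measure this. A more direct option, sketched below in Python, is to run the compressor as a child process and read its peak resident set size from `getrusage(RUSAGE_CHILDREN)`. This is an untested suggestion, not what I did; the function name is hypothetical, and it assumes Linux, where `ru_maxrss` is reported in kilobytes.

```python
import resource
import subprocess


def peak_child_rss_mib(cmd: list[str], stdin_path: str) -> float:
    """Run cmd with stdin read from stdin_path, discard its output, return peak RSS in MiB.

    On Linux, RUSAGE_CHILDREN's ru_maxrss is the RSS of the largest waited-for
    child so far, so call this from a fresh interpreter to measure one command.
    """
    with open(stdin_path, "rb") as src:
        subprocess.run(cmd, stdin=src, stdout=subprocess.DEVNULL, check=True)
    # ru_maxrss is in kilobytes on Linux.
    return resource.getrusage(resource.RUSAGE_CHILDREN).ru_maxrss / 1024
```

For example, `peak_child_rss_mib(["xz", "--check=crc32", "--lzma2=dict=1MiB"], "initramfs.img")` on an uncompressed image (such as one built with `compress="cat"`) would report xz’s peak usage. The path is a placeholder, and those xz flags are my assumption about what dracut passes, so check dracut’s own invocation before relying on the number.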

As a final note, I checked cve.org for security vulnerabilities in the Linux kernel’s xz and zstd implementations. I found none for either algorithm (using the search terms “linux kernel xz” and “linux kernel zstd”).

In conclusion, I believe xz is the best compression method for us to use with dracut, due to its slightly better speed and much better compression compared with our current default, gzip. zstd is my second choice, and may be what we want if we run into problems with xz’s speed or memory consumption.